Artificial Intelligence: Snake Oil or Powerful Nonprofit Tool?

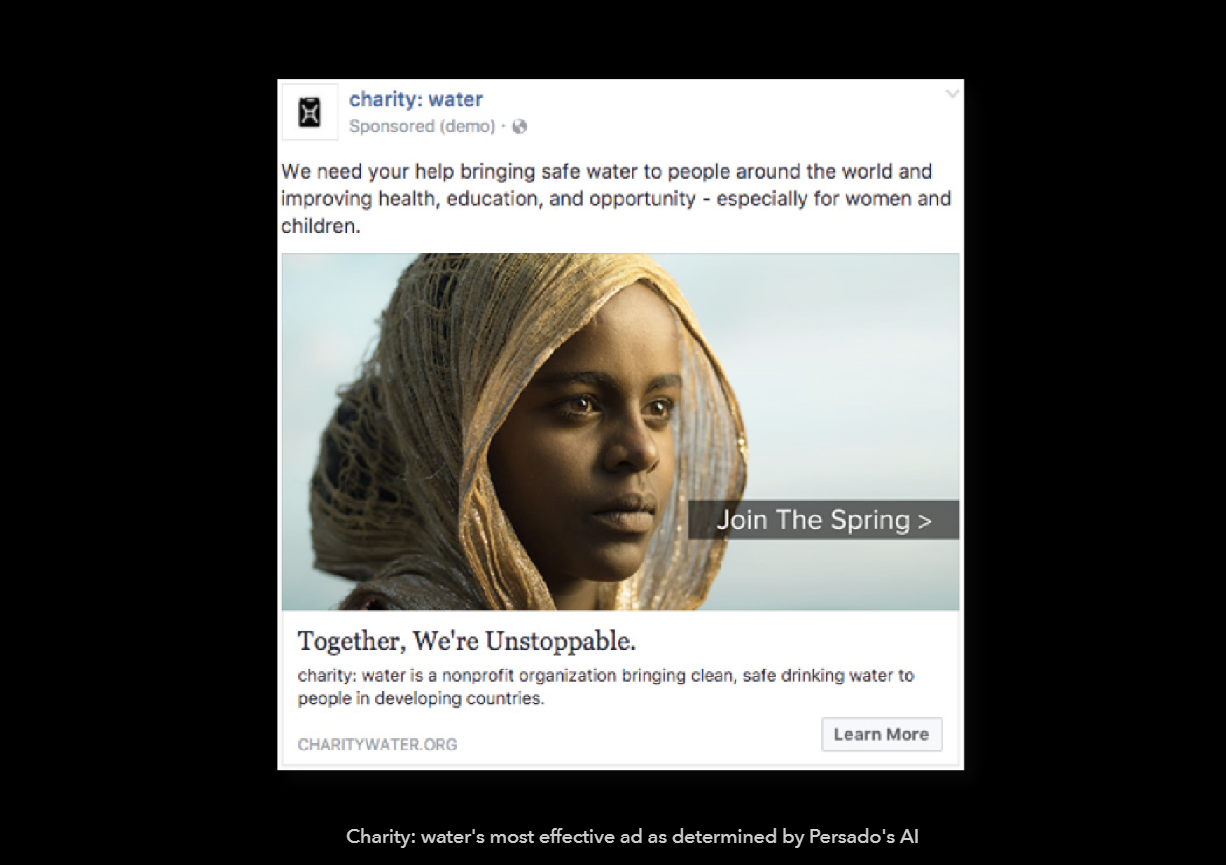

Can nonprofits change hearts and minds in the advocacy and political arenas by adding artificial intelligence (AI) tools to their online organizing toolbox? Or is AI just another form of nonprofit snake oil with no real impact? Spoiler: if you use it right, it can be a powerful tool to enhance your fundraising and advocacy. First step?

"How to Recognize AI Snake Oil," by Arvind Narayanan, associate professor of computer science (@random_walker): Much of what's being sold as "AI" today is snake oil: it does not and cannot work. Why is this happening? How can we recognize flawed AI claims and push back?

AI Snake Oil (Princeton University Press)

The acclaimed new book "AI Snake Oil" by Princeton AI scholars Arvind Narayanan and Sayash Kapoor offers readers a smart new perspective, with surprising insights on what should excite people most and what should alarm us. AI Snake Oil is an essential read for anyone interested in AI's real-world impact. Narayanan and Kapoor offer a refreshing counterpoint to both AI evangelists and doomsayers, advocating for a balanced, evidence-based approach to understanding artificial intelligence. By revealing AI's limits and real risks, AI Snake Oil will help you make better decisions about whether and how to use AI at work and at home.

Learn how vendors game the accuracy of AI predictions to attract investors and buyers. Recognize that evaluating AI models on the data they were trained on is a way of "teaching to the test." Additionally, learn how "priming bias" is introduced when past exposure to a concept leads to overemphasizing its importance in future decisions.
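To make the "teaching to the test" point concrete, here is a minimal sketch in Python. It is not taken from the book; the data, the function names, and the choice of a nearest-neighbor "model" are all illustrative assumptions. The labels are coin flips, so there is nothing real to learn, yet the model looks perfect when it is scored on the same data it memorized.

```python
import random

def make_data(n, rng):
    # Hypothetical dataset: two random features per example and a
    # coin-flip label, so no model can honestly beat chance.
    return [((rng.random(), rng.random()), rng.randint(0, 1)) for _ in range(n)]

def predict(train, point):
    # 1-nearest-neighbor: copy the label of the closest training example.
    nearest = min(
        train,
        key=lambda ex: (ex[0][0] - point[0]) ** 2 + (ex[0][1] - point[1]) ** 2,
    )
    return nearest[1]

def accuracy(train, data):
    # Fraction of examples in `data` whose label the model gets right.
    hits = sum(predict(train, x) == y for x, y in data)
    return hits / len(data)

rng = random.Random(0)
train = make_data(200, rng)
held_out = make_data(200, rng)

# Scoring on the training data: every point's nearest neighbor is itself.
train_acc = accuracy(train, train)
# Scoring on data the model has never seen.
held_out_acc = accuracy(train, held_out)

print(f"accuracy on training data: {train_acc:.2f}")
print(f"accuracy on held-out data: {held_out_acc:.2f}")
```

Accuracy on the training data comes out perfect because the model has simply memorized the answers, while accuracy on the held-out data stays close to a coin flip. A vendor quoting only the first number would be reporting a score that says nothing about real-world performance.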

The book explains the crucial differences between types of AI, why organizations are falling for AI snake oil, why AI can't fix social media, why AI isn't an existential risk, and why we should be far more worried about what people will do with AI than about anything AI will do on its own. TL;DR: AI has been a major focus at CES 2025, but Princeton University experts Arvind Narayanan and Sayash Kapoor warn against deceptive marketing practices labeled "AI snake oil." In their book AI Snake Oil, the two Princeton researchers pinpoint the culprits of the AI hype cycle and advocate for a more critical, holistic understanding of artificial intelligence.

If You Can't Escape the AI Boom, Arm Yourself with Knowledge and Respond Calmly (WIRED.jp)
