ComfyUI AnimateDiff and InstanceDiffusion Workflows

AnimateDiff in ComfyUI is an amazing way to generate AI videos. In this guide I will try to help you get started with it and give you some starting workflows to work with. My aim is to give you a setup that serves as a jumping-off point for making your own videos. You will learn how to use AnimateDiff in ComfyUI for animation, how to run an AnimateDiff workflow for free, and what AnimateDiff v3, SDXL, and v2 offer.

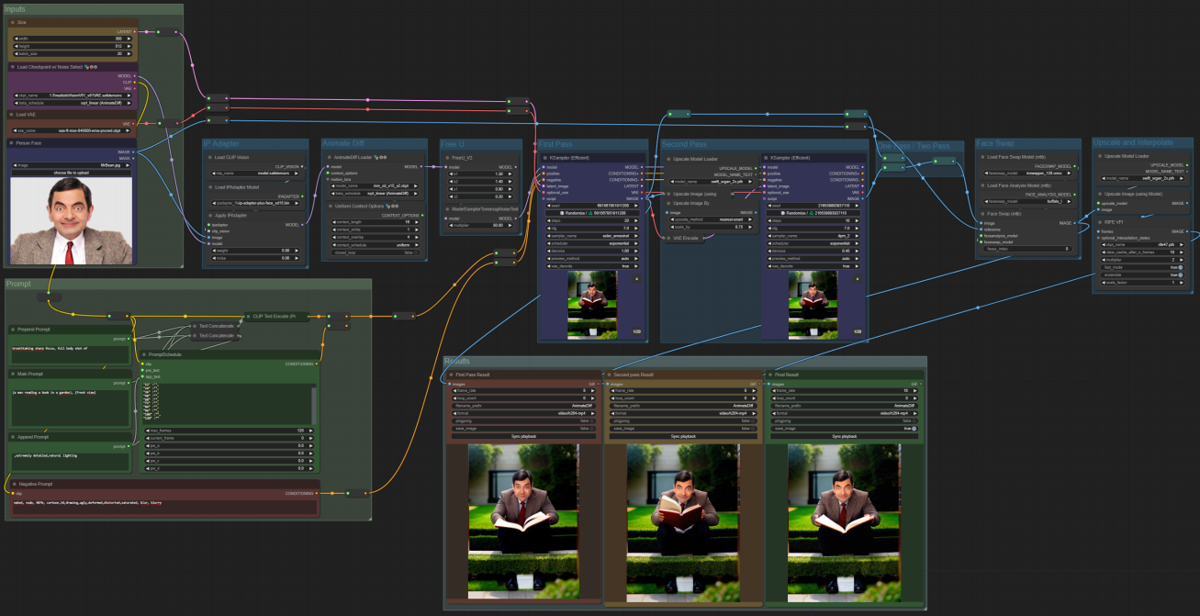

AnimateDiff is an extension, or custom node, for Stable Diffusion. It is available for many user interfaces, but this guide covers it inside ComfyUI. It can create coherent animations from a text prompt, and also from a video input combined with ControlNet.

All of these workflows use Kosinkadink's ComfyUI extension. If you're just getting started, check out the intro at the top of his repo for the basics. I'd also encourage you to install ComfyUI Manager to manage dependencies. Now, on to the workflows!

This workflow lets you segment up to 8 different elements from an input image or video into colored masks, which IPAdapter (IPA) then uses to stylize and animate each element. First, select your source input mode with the switch in the middle of the graph: 1 is image mode, 2 is video mode.
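In ComfyUI the segmentation and masking are done by nodes inside the graph. Purely to illustrate the idea, here is a minimal plain-Python sketch that assumes the segmenter outputs an integer label map per frame (0 for background, any other value for an element id). The palette, the label-map format, and the function names are hypothetical and not part of the workflow itself. The `mode` argument mirrors the switch: 1 for a single image, 2 for a list of video frames.

```python
from typing import Dict, List, Sequence, Tuple, Union

RGB = Tuple[int, int, int]
LabelMap = List[List[int]]  # one integer element id per pixel; 0 = background

BACKGROUND = 0
BLACK: RGB = (0, 0, 0)
# Hypothetical palette: one distinct color per element, 8 elements maximum.
PALETTE: List[RGB] = [
    (255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 0),
    (255, 0, 255), (0, 255, 255), (255, 128, 0), (128, 0, 255),
]


def element_ids(frames: Sequence[LabelMap]) -> List[int]:
    """Collect the distinct non-background ids across all frames (at most 8)."""
    ids = sorted({v for frame in frames for row in frame for v in row if v != BACKGROUND})
    if len(ids) > len(PALETTE):
        raise ValueError(f"at most {len(PALETTE)} elements supported, got {len(ids)}")
    return ids


def colorize_source(source: Union[LabelMap, List[LabelMap]], mode: int) -> List[List[List[RGB]]]:
    """Return one colored mask frame per input frame.

    mode 1 = image mode (source is a single label map),
    mode 2 = video mode (source is a list of label maps, one per frame).
    """
    if mode == 1:
        frames = [source]
    elif mode == 2:
        frames = list(source)
    else:
        raise ValueError("mode must be 1 (image) or 2 (video)")
    # Ids are gathered over all frames so each element keeps one color for the whole clip.
    colors: Dict[int, RGB] = {elem: PALETTE[k] for k, elem in enumerate(element_ids(frames))}
    return [[[colors.get(v, BLACK) for v in row] for row in frame] for frame in frames]
```

Collecting the ids across all frames, rather than frame by frame, is a deliberate choice: an element keeps the same color even if it briefly leaves the shot, so the mask that drives its IPAdapter stays consistent.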

ComfyUI nodes for using InstanceDiffusion. Original research repo: frank-xwang/InstanceDiffusion on GitHub. Clone or download this repo into your ComfyUI `custom_nodes` directory. At this time there are no Python package requirements beyond the standard ComfyUI requirements.
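If you prefer scripting the install over cloning by hand, the sketch below builds and runs a `git clone` into the `custom_nodes` folder. It assumes `git` is on your PATH; `install`, `clone_command`, and `custom_node_target` are hypothetical helper names, and the repo URL is deliberately left as a parameter because you should pass the URL of the ComfyUI nodes repo you are actually installing.

```python
import subprocess
from pathlib import Path
from typing import List


def custom_node_target(comfyui_root: str, repo_url: str) -> Path:
    """Folder the repo is cloned into: <ComfyUI root>/custom_nodes/<repo name>."""
    name = repo_url.rstrip("/").split("/")[-1]
    if name.endswith(".git"):
        name = name[: -len(".git")]
    return Path(comfyui_root) / "custom_nodes" / name


def clone_command(comfyui_root: str, repo_url: str) -> List[str]:
    """Build the git command without running it."""
    return ["git", "clone", repo_url, str(custom_node_target(comfyui_root, repo_url))]


def install(comfyui_root: str, repo_url: str) -> None:
    """Clone the node repo unless it is already present.

    Raises subprocess.CalledProcessError if git fails.
    """
    target = custom_node_target(comfyui_root, repo_url)
    if target.exists():
        print(f"{target} already exists, skipping clone")
        return
    subprocess.run(clone_command(comfyui_root, repo_url), check=True)
```

For example, call `install("/path/to/ComfyUI", nodes_repo_url)` and then restart ComfyUI so it loads the new nodes. Because there are no extra Python requirements, no `pip install` step follows the clone.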

This workflow demonstrates how to create realistic video and animation with AnimateDiff v3, and it will also help you learn the basic techniques of video creation with Stable Diffusion. It includes a settings module, a LoRA for AnimateLCM, a Stable Diffusion 1.5 checkpoint, and a VAE. Abe emphasizes limiting the resolution to 512 because the workflow uses a Stable Diffusion 1.5 model, and he discusses the batch size and motion scale.
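ComfyUI handles these settings through nodes. As a plain-Python illustration of the advice above, here is a minimal sketch of a settings object that caps the longer side at 512 while keeping the aspect ratio. The field names, file names, the default of 16 frames, and treating batch size as the frame count and motion scale as a simple non-negative multiplier are my assumptions, not the workflow's actual settings module. Rounding to multiples of 8 reflects the fact that the Stable Diffusion 1.5 VAE works on latents 8 times smaller than the image.

```python
from dataclasses import dataclass
from typing import Tuple

SD15_MAX_SIDE = 512  # resolution cap recommended for Stable Diffusion 1.5
LATENT_FACTOR = 8    # SD VAE downsampling factor; sides should be multiples of 8


def fit_resolution(width: int, height: int, max_side: int = SD15_MAX_SIDE) -> Tuple[int, int]:
    """Shrink so the longer side is at most max_side, keep the aspect ratio,
    and round each side down to a multiple of 8 (integer math, no float error)."""
    longest = max(width, height)
    if longest > max_side:
        width, height = width * max_side // longest, height * max_side // longest
    snap = lambda x: max(LATENT_FACTOR, x // LATENT_FACTOR * LATENT_FACTOR)
    return snap(width), snap(height)


@dataclass
class AnimationSettings:
    checkpoint: str           # hypothetical file name of an SD 1.5 checkpoint
    vae: str                  # hypothetical VAE file name
    lcm_lora: str             # hypothetical file name of the AnimateLCM LoRA
    width: int = 512
    height: int = 512
    batch_size: int = 16      # assumption: one latent per frame, so batch size = frame count
    motion_scale: float = 1.0  # assumption: plain multiplier on motion strength

    def __post_init__(self) -> None:
        self.width, self.height = fit_resolution(self.width, self.height)
        if self.batch_size < 1:
            raise ValueError("batch_size must be at least 1")
        if self.motion_scale < 0:
            raise ValueError("motion_scale must be non-negative")

    def duration_seconds(self, fps: float) -> float:
        """Clip length in seconds for the given playback frame rate."""
        return self.batch_size / fps
```

For instance, a 1920x1080 source is fitted to 512x288, and 16 frames played at 8 fps give a 2-second clip.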

We have also developed a lightweight version of the Stable Diffusion ComfyUI workflow that achieves 70% of the performance of AnimateDiff with RAVE. This means that even on a lower-end computer you can still create stunning animations for platforms like Shorts, TikTok, or media advertisements.
