ComfyUI Vid-to-Vid AnimateDiff Workflow, Part 1

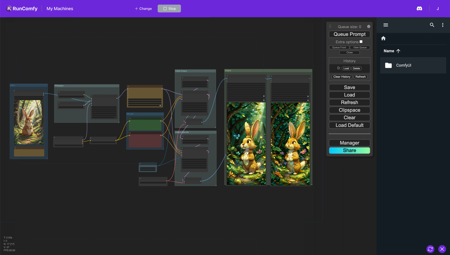

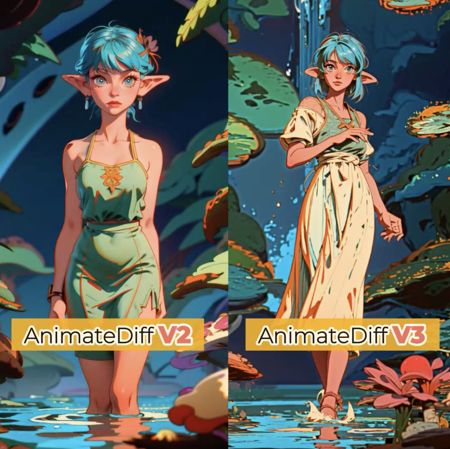

Checkpoint: epiCRealism (Civitai model 25694). Download the IP-Adapter custom node and models from the ComfyUI Manager. AnimateDiff in ComfyUI is an amazing way to generate AI videos. In this guide I will try to help you get started with it and give you some starter workflows to work with. My aim is to give you a setup that serves as a jumping-off point for making your own videos.

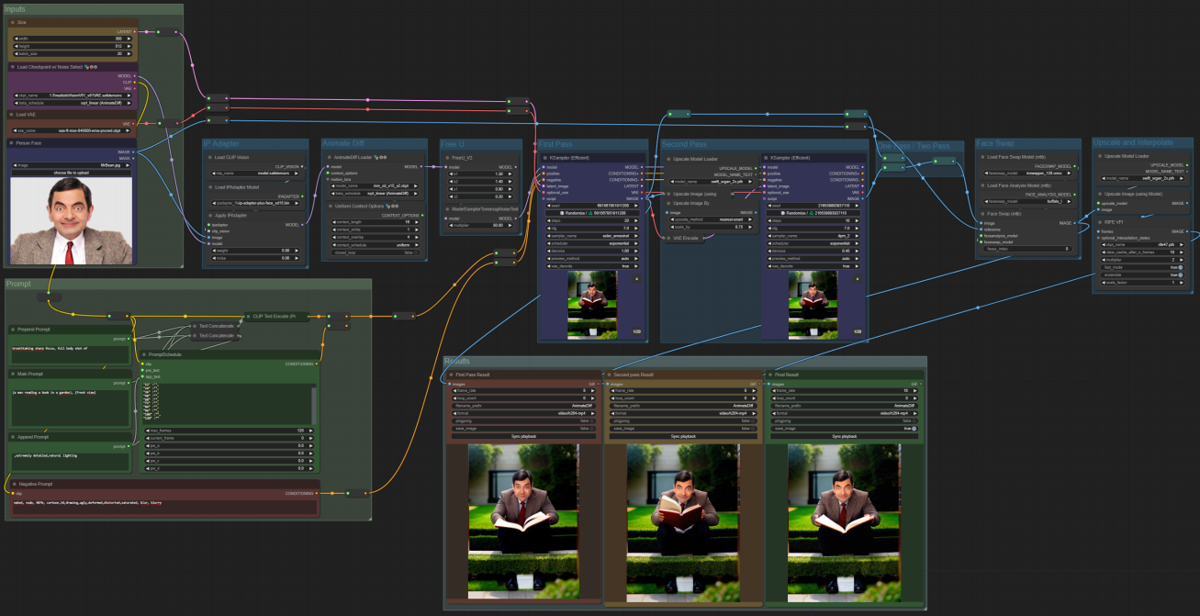

Since someone asked me how to generate a video, I'm sharing my ComfyUI workflow. Compared to other authors' workflows, this one is very concise.

How to use:

1. Split your video into frames and reduce them to the desired frame rate (I like going for about 12 fps).
2. Run the Step 1 workflow once. All you need to change is where the original frames are located and the output dimensions you want (with 12 GB of VRAM, the maximum is about 720p).

👉 Creates really nice video-to-video animations with AnimateDiff, together with LoRAs, depth mapping, and the DWS processor for better motion and clearer detection of the subject's body parts.

👉 Load the video, select the checkpoint and LoRA, make sure you have all the ControlNet models and AnimateDiff models, and hit Queue.

Damola, a digital artist, demonstrates how to create a vid-to-vid animation using a ComfyUI workflow by InnerReflections. Stable Diffusion ComfyUI workflows: h.
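
The two preparation steps above can be sketched in Python as follows. This is a hypothetical helper and is not part of the shared workflow. It rests on a few assumptions:

- ffmpeg is installed for frame extraction. Its `fps` video filter resamples the clip.
- Frames are dropped with a simple rule that may not match ffmpeg's own rounding exactly.
- "About 720p on 12 GB of VRAM" is treated as a 1280x720 pixel budget.

Dimensions are rounded down to a multiple of 8 because Stable Diffusion works in a latent space at 1/8 of the pixel resolution. The names `ffmpeg_extract_cmd`, `kept_frame_indices`, and `fit_dimensions` are illustrative.

```python
import math
from fractions import Fraction
from pathlib import Path


def ffmpeg_extract_cmd(video: str, out_dir: str, fps: int = 12) -> list[str]:
    """Step 1: build an ffmpeg command that resamples the clip to `fps`
    and writes numbered PNG frames into out_dir (which must already exist)."""
    pattern = str(Path(out_dir) / "frame_%05d.png")
    return ["ffmpeg", "-i", video, "-vf", f"fps={fps}", pattern]


def kept_frame_indices(src_fps: int, dst_fps: int, n_src_frames: int) -> list[int]:
    """Which source frames survive when dropping src_fps -> dst_fps.
    Integer frame rates only; each output frame reuses the most recent
    source frame at its timestamp (a simple drop-frame rule)."""
    n_dst = n_src_frames * dst_fps // src_fps  # whole output frames in the clip
    return [i * src_fps // dst_fps for i in range(n_dst)]


def fit_dimensions(width: int, height: int, max_long: int = 1280,
                   max_short: int = 720, multiple: int = 8) -> tuple[int, int]:
    """Step 2: shrink (width, height) to fit a max_long x max_short budget
    (assumed ~720p limit for 12 GB VRAM), keep the aspect ratio, never
    upscale, and round each side down to a multiple of 8."""
    # Exact rational arithmetic avoids float rounding at the boundaries.
    scale = min(Fraction(1),
                Fraction(max_long, max(width, height)),
                Fraction(max_short, min(width, height)))
    w = math.floor(width * scale) // multiple * multiple
    h = math.floor(height * scale) // multiple * multiple
    return w, h


if __name__ == "__main__":
    print(ffmpeg_extract_cmd("input.mp4", "frames"))
    print(fit_dimensions(1920, 1080))  # (1280, 720)
```

To use it, run the returned command with `subprocess.run(cmd, check=True)` once the output folder exists. Then point the Step 1 workflow at that folder and enter the dimensions returned by `fit_dimensions`.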

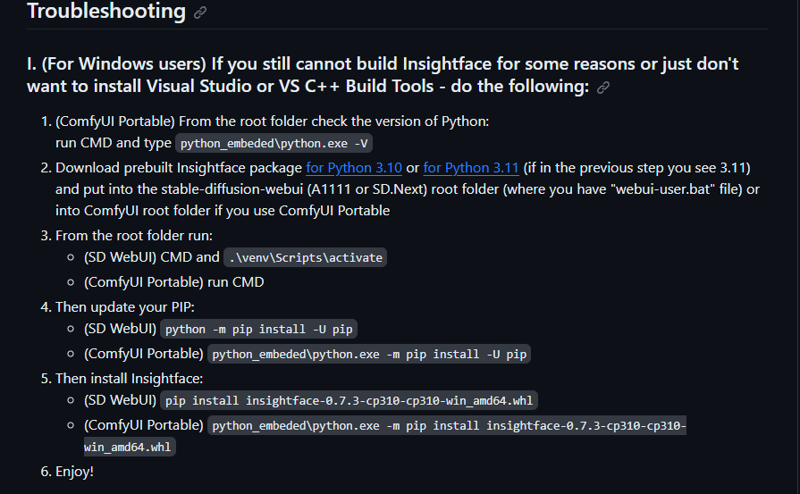

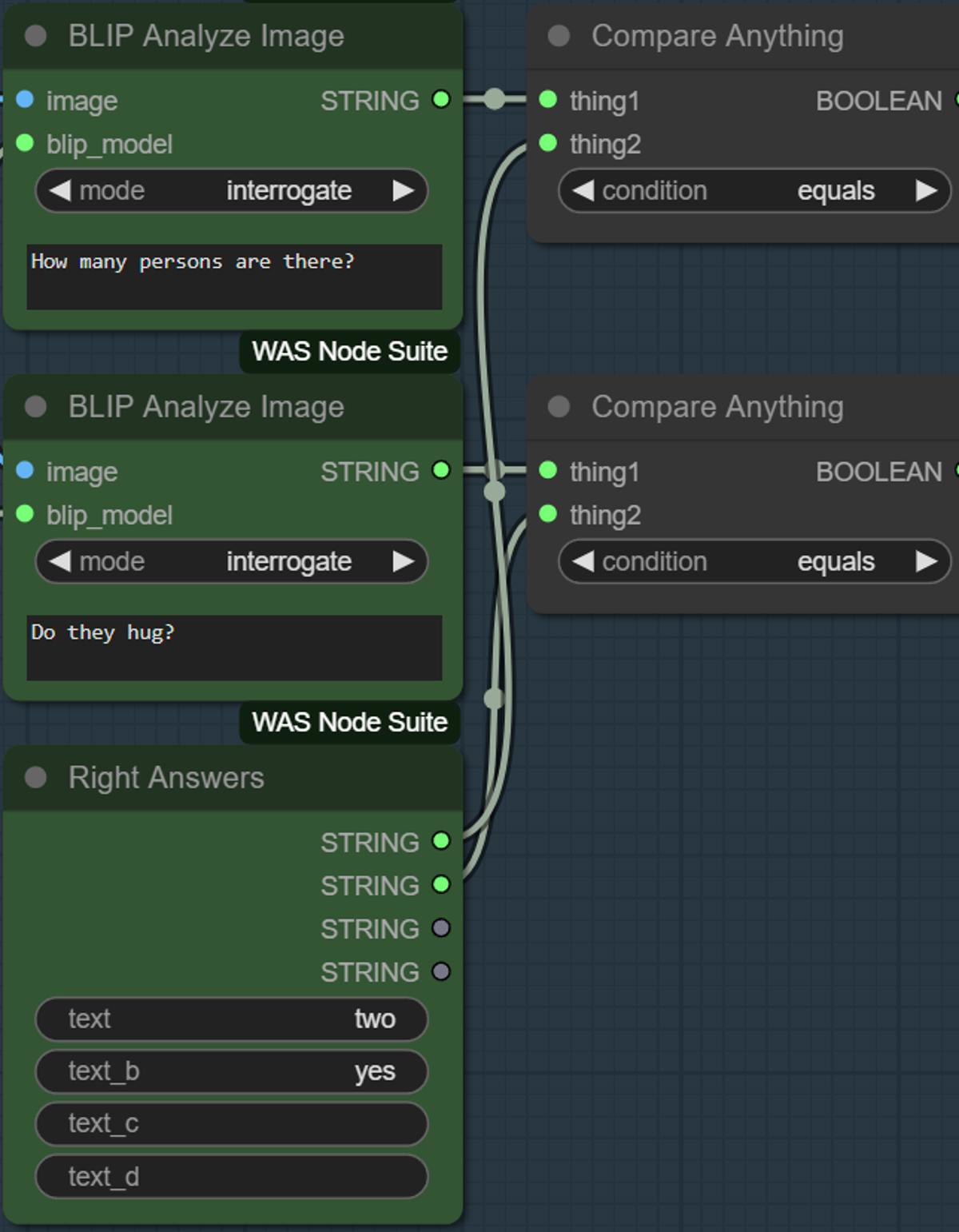
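
Before hitting Queue, the checklist above says to make sure all the ControlNet and AnimateDiff models are in place. A small pre-flight script can check that. The sketch below is hypothetical and rests on these assumptions:

- Every file name is only an example. Substitute the files your workflow actually loads.
- `checkpoints`, `loras`, and `controlnet` follow ComfyUI's default `models/` layout.
- The `animatediff_models` and `ipadapter` folders depend on which custom nodes you installed.

```python
from pathlib import Path

# Example file names only (assumptions); replace them with the files your
# workflow loads. The animatediff_models and ipadapter folder names depend on
# the installed custom nodes.
REQUIRED_MODELS = {
    "checkpoints": ["epicrealism.safetensors"],
    "loras": ["my_style_lora.safetensors"],
    "controlnet": ["control_v11f1p_sd15_depth.pth", "control_v11p_sd15_openpose.pth"],
    "animatediff_models": ["mm_sd_v15_v2.ckpt"],
    "ipadapter": ["ip-adapter_sd15.safetensors"],
}


def missing_models(comfy_root: str, required: dict[str, list[str]]) -> list[str]:
    """Return 'folder/file' entries that are not present under <comfy_root>/models."""
    models_dir = Path(comfy_root) / "models"
    return [
        f"{folder}/{name}"
        for folder, names in required.items()
        for name in names
        if not (models_dir / folder / name).is_file()
    ]


if __name__ == "__main__":
    missing = missing_models("ComfyUI", REQUIRED_MODELS)
    print("All models found." if not missing else "Missing:\n" + "\n".join(missing))
```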

Of the different ways you can run AnimateDiff right now, ComfyUI (ComfyUI AnimateDiff, the approach this guide covers) is my preferred method. It lets you use ControlNets for video-to-video generation and prompt scheduling to change the prompt over the course of the video. I break down what each node does as ComfyUI transforms the original video into an animation, using ControlNets and AnimateDiff to bring your creations to life.
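
Prompt scheduling boils down to mapping keyframe numbers to prompts and working out, for every frame, which prompts are active. The sketch below is a hypothetical illustration of that idea with linear blending between keyframes. Prompt-scheduling custom nodes implement this in their own way, and this is not any particular node's code. The function name `schedule` and the linear blend are assumptions.

```python
from bisect import bisect_right


def schedule(keyframes: dict[int, str], num_frames: int) -> list[tuple[str, str, float]]:
    """For each frame return (current prompt, next prompt, blend weight toward next).
    Weight rises linearly from 0.0 at one keyframe toward 1.0 at the next;
    after the last keyframe its prompt is held with weight 0.0."""
    frames = sorted(keyframes)
    out = []
    for f in range(num_frames):
        # Latest keyframe at or before f (clamped to the first keyframe).
        i = max(bisect_right(frames, f) - 1, 0)
        start = frames[i]
        if i + 1 < len(frames):
            end = frames[i + 1]
            w = (f - start) / (end - start) if f >= start else 0.0
            out.append((keyframes[start], keyframes[end], w))
        else:
            out.append((keyframes[start], keyframes[start], 0.0))
    return out


if __name__ == "__main__":
    for frame, entry in enumerate(schedule({0: "a cat", 12: "a dog"}, 24)):
        print(frame, entry)
```

With 12 fps footage, the keyframes `{0: ..., 12: ...}` above switch prompts one second into the clip.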
