DeepSeek AI DeepSeek Coder 1.3B Base (Hugging Face) - Eroppa

Massive training data: trained from scratch on 2T tokens, comprising 87% code and 13% natural-language data in English and Chinese. Highly flexible and scalable: offered in model sizes of 1.3B, 5.7B, 6.7B, and 33B, so users can choose the setup that best fits their requirements.

This repo contains AWQ model files for DeepSeek's DeepSeek Coder 1.3B Base. These files were quantised using hardware kindly provided by Massed Compute. AWQ is an efficient, accurate, and fast low-bit weight quantization method, currently supporting 4-bit quantization.
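To make "4-bit weight quantization" concrete, here is a minimal sketch in plain Python. It is not AWQ itself: AWQ additionally rescales weights using activation statistics before quantizing, which is omitted here. The sketch shows only plain round-to-nearest quantization of one group of weights, plus a rough weight-storage estimate. The function names and the nominal parameter count of 1.3e9 are assumptions for illustration, and the estimate ignores the per-group scales and offsets a real format also stores.

```python
from typing import List, Tuple


def quantize_group(weights: List[float], bits: int = 4) -> Tuple[List[int], float, float]:
    """Asymmetric round-to-nearest quantization of one group of weights.

    Returns integer codes in [0, 2**bits - 1] together with the scale and
    minimum needed to map the codes back to floats.
    """
    levels = (1 << bits) - 1            # 15 for 4-bit codes
    w_min, w_max = min(weights), max(weights)
    # A constant group has zero range; fall back to a scale of 1.0.
    scale = (w_max - w_min) / levels or 1.0
    codes = [min(levels, max(0, round((w - w_min) / scale))) for w in weights]
    return codes, scale, w_min


def dequantize_group(codes: List[int], scale: float, w_min: float) -> List[float]:
    """Map integer codes back to approximate float weights."""
    return [c * scale + w_min for c in codes]


def weight_bytes(n_params: float, bits: int) -> float:
    """Bytes needed for the weights alone (no scales, offsets, or activations)."""
    return n_params * bits / 8


if __name__ == "__main__":
    group = [-1.0, -0.5, 0.0, 0.5, 1.0, 2.0]
    codes, scale, w_min = quantize_group(group)
    restored = dequantize_group(codes, scale, w_min)
    worst = max(abs(a - b) for a, b in zip(group, restored))
    print("codes:", codes)
    print("worst-case error:", worst, "with step size", scale)
    # Nominal 1.3e9 parameters (assumed): 16-bit versus 4-bit weight storage.
    print("fp16 GB:", weight_bytes(1.3e9, 16) / 1e9)
    print("4-bit GB:", weight_bytes(1.3e9, 4) / 1e9)
```

Each restored weight lands within half a quantization step of the original, which is the error that activation-aware methods such as AWQ try to make less harmful for the weights that matter most.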

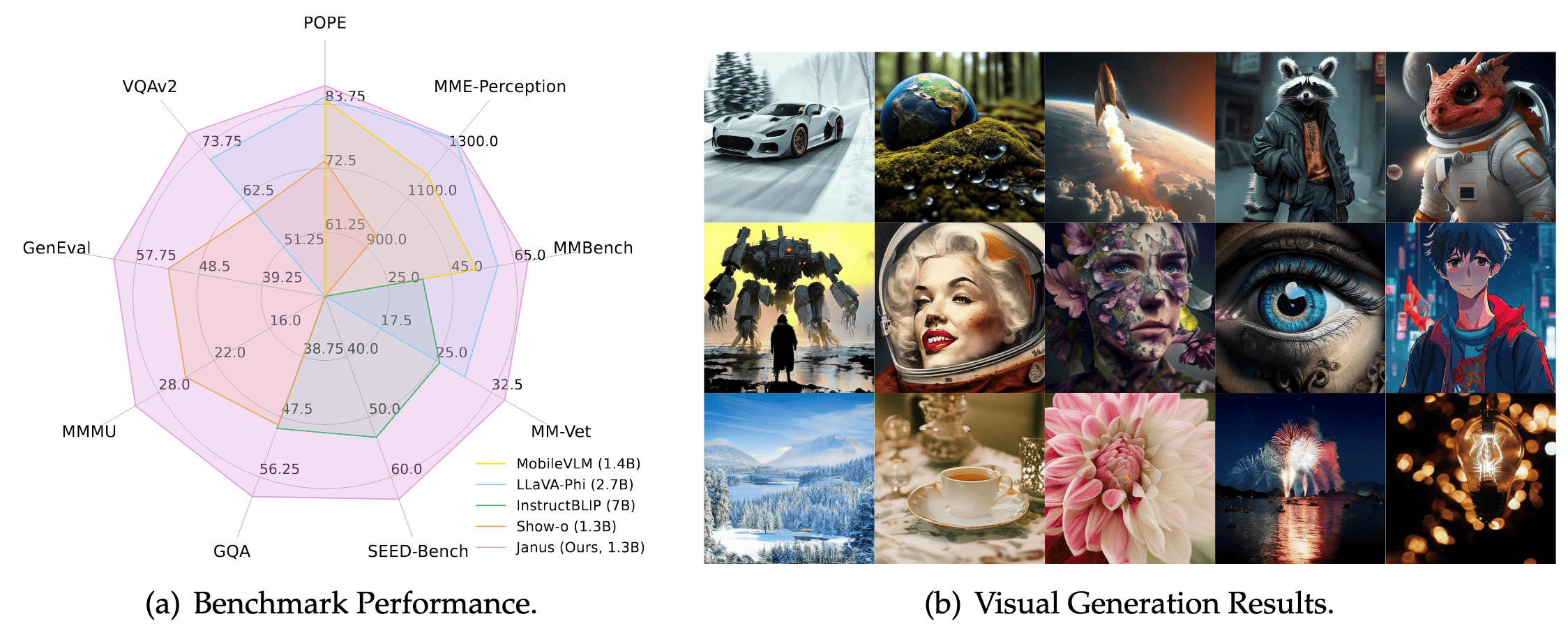

According to the model card, extensive evaluations demonstrate that DeepSeek Coder not only achieves state-of-the-art performance among open-source code models across multiple benchmarks but also surpasses existing closed-source models such as Codex and GPT-3.5. DeepSeek Coder, which combines 87% code with 13% natural-language data, is a code language model trained on 2T tokens and offered in sizes from 1B to 33B. With its code completion and infilling capabilities, the 1.3B base model supports project-level code writing and leads performance among open-source code models.

DeepSeek Coder 1.3B Instruct (Hugging Face), introduction of DeepSeek Coder: DeepSeek Coder is composed of a series of code language models, each trained from scratch on 2T tokens, with a composition of 87% code and 13% natural language in both English and Chinese. We provide various sizes of the code model, ranging from 1B to 33B. Created by DeepSeek AI, this model is described as a breakthrough in code generation and understanding, as detailed in "DeepSeek-Coder-V2: Breaking the Barrier of Closed-Source Models in Code Intelligence". The model processes text input for code completion, insertion, and project-level tasks using a 16K-token window.
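Code insertion (infilling) is usually driven by a fill-in-the-middle prompt: the code before and after the gap is wrapped in sentinel tokens, and the model generates the missing middle. The sketch below only assembles such a prompt as a string. The three sentinel strings are an assumption taken from the upstream DeepSeek Coder model card rather than from this page, and should be checked against the tokenizer of the exact checkpoint in use. The helper name `build_fim_prompt` is hypothetical.

```python
# Sentinel strings assumed from the upstream model card; verify them against
# the tokenizer's special tokens before relying on them.
FIM_BEGIN = "<｜fim▁begin｜>"
FIM_HOLE = "<｜fim▁hole｜>"
FIM_END = "<｜fim▁end｜>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Wrap the code before and after a gap in fill-in-the-middle sentinels.

    The model is expected to generate the text that belongs at the hole.
    """
    for part in (prefix, suffix):
        if any(tok in part for tok in (FIM_BEGIN, FIM_HOLE, FIM_END)):
            raise ValueError("input already contains a fill-in-the-middle sentinel")
    return f"{FIM_BEGIN}{prefix}{FIM_HOLE}{suffix}{FIM_END}"


if __name__ == "__main__":
    before = "def mean(values):\n    total = 0\n"
    after = "    return total / len(values)\n"
    print(build_fim_prompt(before, after))
```

The resulting string would then be tokenized and passed to the base model's generation call; the instruct variants expect a chat-style prompt instead.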

The model card for Mcanoglu's "DeepSeek Coder 1.3B Base finetuned defect" repeats the same introduction of DeepSeek Coder. The DeepSeek Coder 1.3B Instruct repository on Hugging Face is tagged Text Generation, Transformers, PyTorch, Safetensors, and llama, under the deepseek license.

DeepSeek-VL-1.3B-chat is a tiny vision-language model. It uses SigLIP-L as the vision encoder, supporting 384 x 384 image input, and is built on DeepSeek-LLM-1.3B-base, which is trained on a corpus of approximately 500B text tokens. The whole DeepSeek-VL-1.3B-base model is then trained on around 400B vision-language tokens.

DeepSeek Coder model entry: context length: 16384; model name: deepseek-coder; languages: en, zh; abilities: generate; description: DeepSeek Coder is composed of a series of code language models, each trained from scratch on 2T tokens, with a composition of 87% code and 13% natural language in both English and Chinese. Specifications, model spec 1 (PyTorch, 1.3 billion): model format: pytorch.
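A context length of 16384 is a shared budget: the prompt tokens and the tokens to be generated must fit in it together. As a rough sketch of how a caller might enforce that, the helper below trims a list of token IDs so that room is left for generation. The helper name and the choice to keep the most recent tokens are assumptions for illustration; the token IDs would come from whichever tokenizer is in use.

```python
from typing import List

CONTEXT_LENGTH = 16384  # context length stated in the model entry above


def fit_to_context(token_ids: List[int], max_new_tokens: int,
                   context_length: int = CONTEXT_LENGTH) -> List[int]:
    """Trim a prompt so that prompt plus generated tokens fit in the window.

    Keeps the most recent tokens, since for code completion the text
    closest to the cursor is usually the most relevant.
    """
    if not 0 < max_new_tokens < context_length:
        raise ValueError("max_new_tokens must be between 1 and context_length - 1")
    budget = context_length - max_new_tokens
    # A negative slice start keeps the last `budget` items, or everything
    # when the prompt is already short enough.
    return token_ids[-budget:]


if __name__ == "__main__":
    long_prompt = list(range(20000))          # stand-in for real token IDs
    trimmed = fit_to_context(long_prompt, max_new_tokens=512)
    print(len(trimmed), "prompt tokens kept, 512 reserved for generation")
```

Dropping the oldest tokens is only one policy; for project-level prompts a caller might instead drop whole files so the remaining context stays syntactically complete.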


DeepSeek AI DeepSeek Coder V2 Lite Base: can an AWQ-quantized version be provided?
